Lecture Summary - CS224n: Natural Language Processing with Deep Learning (2)

29 Aug 2022


I. Contents

- BackProp and Neural Networks

- Simple NER

- Backpropagation

- Dependency Parsing

- Syntactic Structure : Constituency and Dependency

- Dependency Grammar and Treebanks

- Transition-based dependency parsing

- Summary

II. BackProp and Neural Networks

Simple NER : Window classification using a binary logistic classifier
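A minimal sketch of window classification, assuming the usual setup: the word vectors in a fixed-size window around the center word are concatenated, passed through one hidden layer, and turned into a probability with a logistic (sigmoid) output. The dimensions, the random parameters, the `tanh` nonlinearity, and the example sentence are all illustrative assumptions, not values taken from the lecture.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def window_probability(window_vectors, W, b, u):
    """Probability that the center word of the window is a named entity."""
    # x: concatenation of all word vectors in the window
    x = [value for vec in window_vectors for value in vec]
    # h = tanh(Wx + b), one hidden layer
    h = [math.tanh(sum(w * xj for w, xj in zip(row, x)) + bi)
         for row, bi in zip(W, b)]
    # s = u . h, a single scalar score
    s = sum(ui * hi for ui, hi in zip(u, h))
    return sigmoid(s)

random.seed(0)
d, hidden = 4, 8                       # embedding size, hidden size (assumed)
words = ["museums", "in", "Paris", "are", "amazing"]   # window of size 5
embeddings = {w: [random.gauss(0, 1) for _ in range(d)] for w in words}
W = [[random.gauss(0, 0.1) for _ in range(len(words) * d)] for _ in range(hidden)]
b = [0.0] * hidden
u = [random.gauss(0, 0.1) for _ in range(hidden)]

window = [embeddings[w] for w in words]
p = window_probability(window, W, b, u)
print(f"P(center word 'Paris' is a named entity) = {p:.3f}")
```

With untrained random parameters the probability is meaningless; training would adjust `W`, `b`, `u`, and the embeddings so that windows centered on entities score high.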

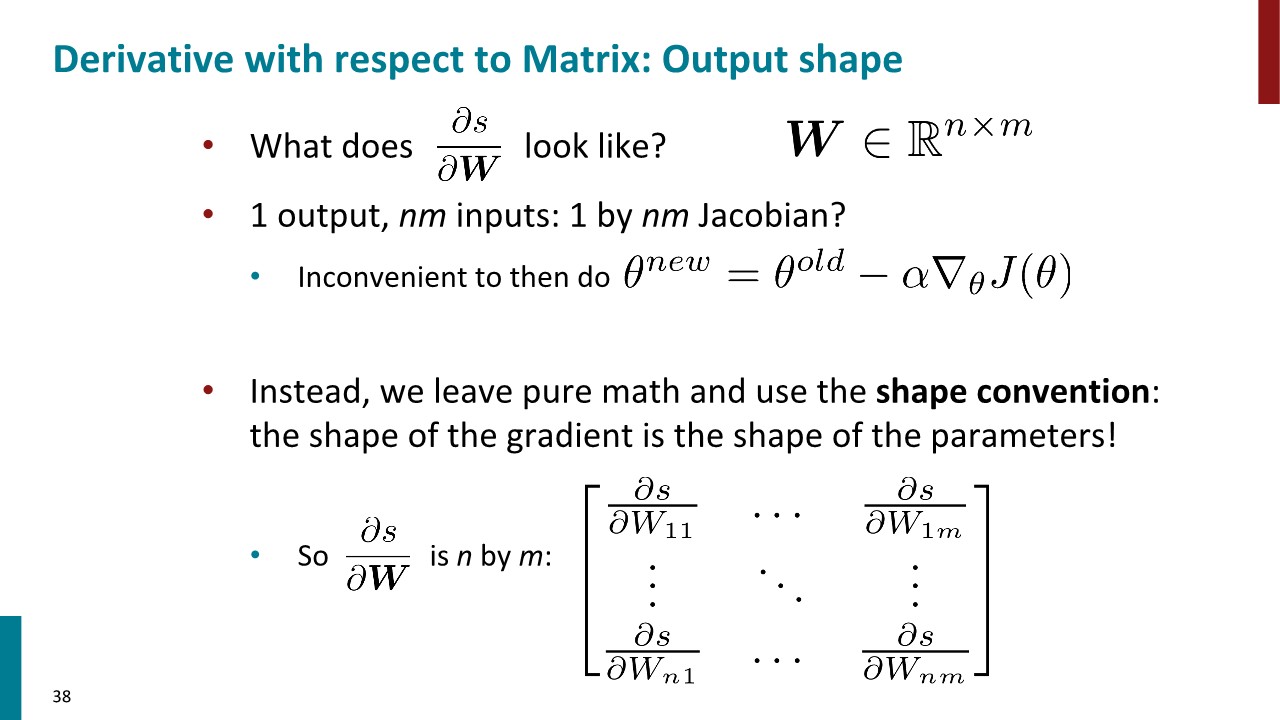

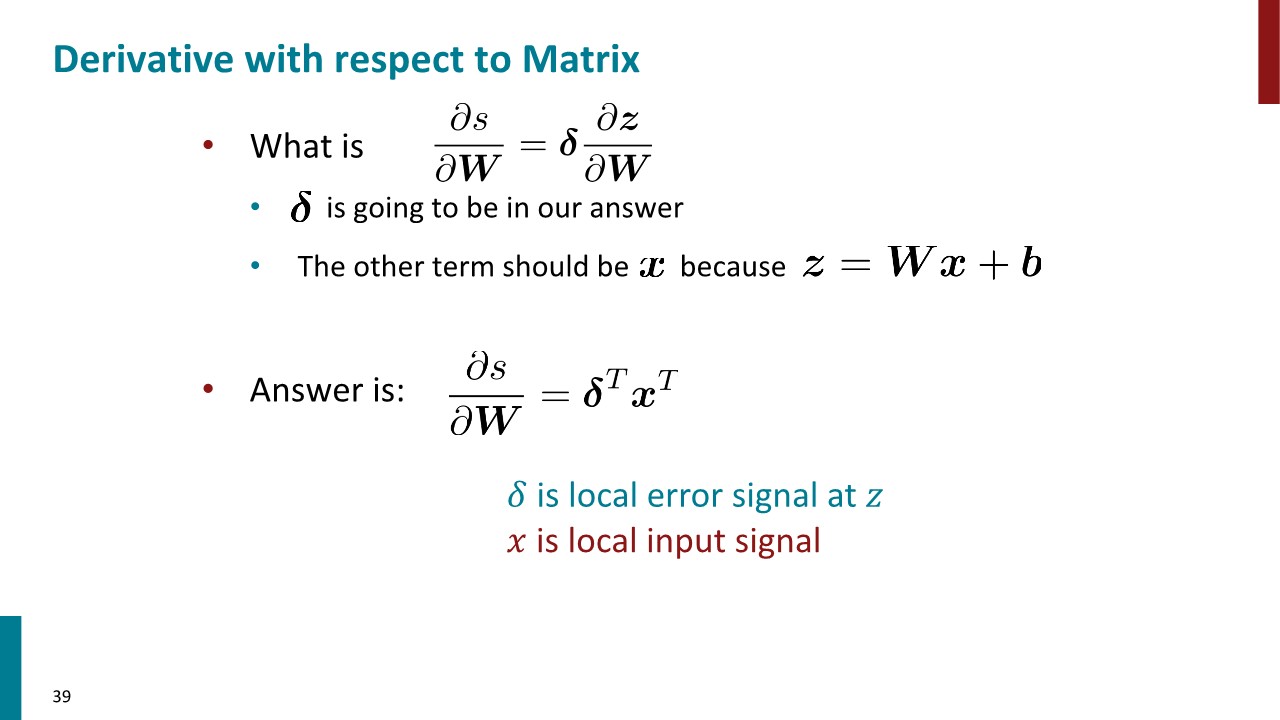

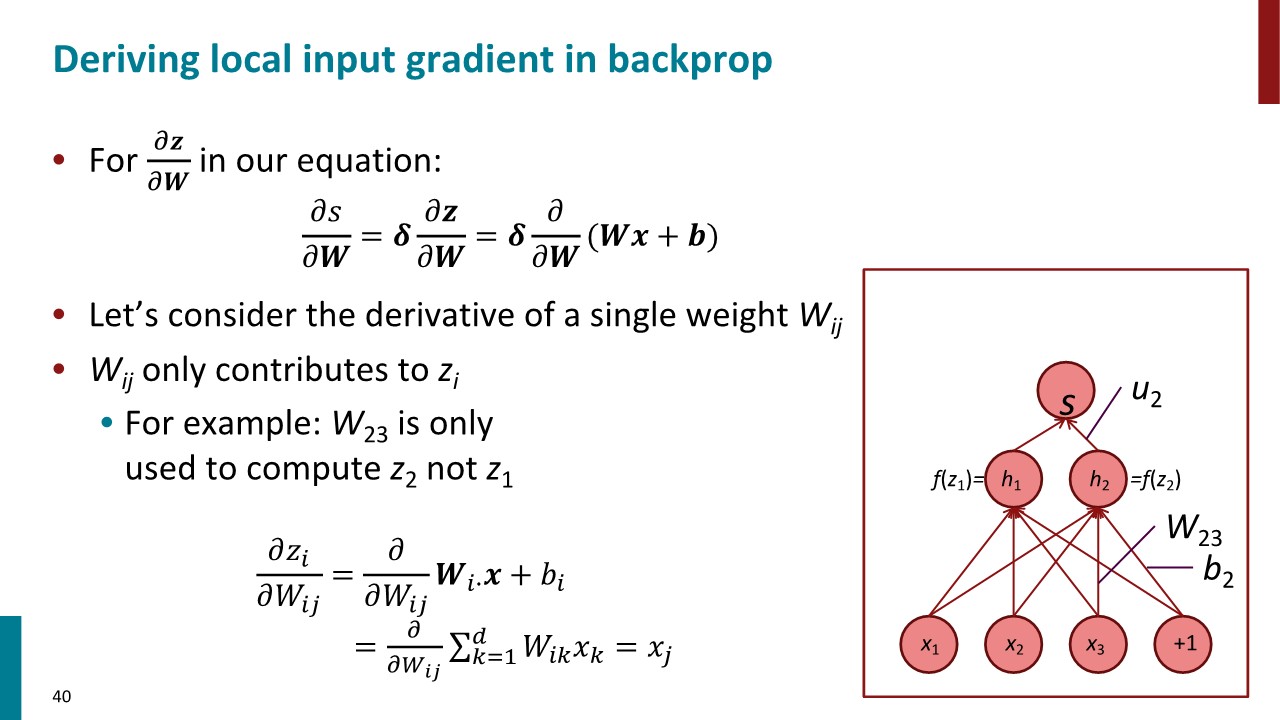

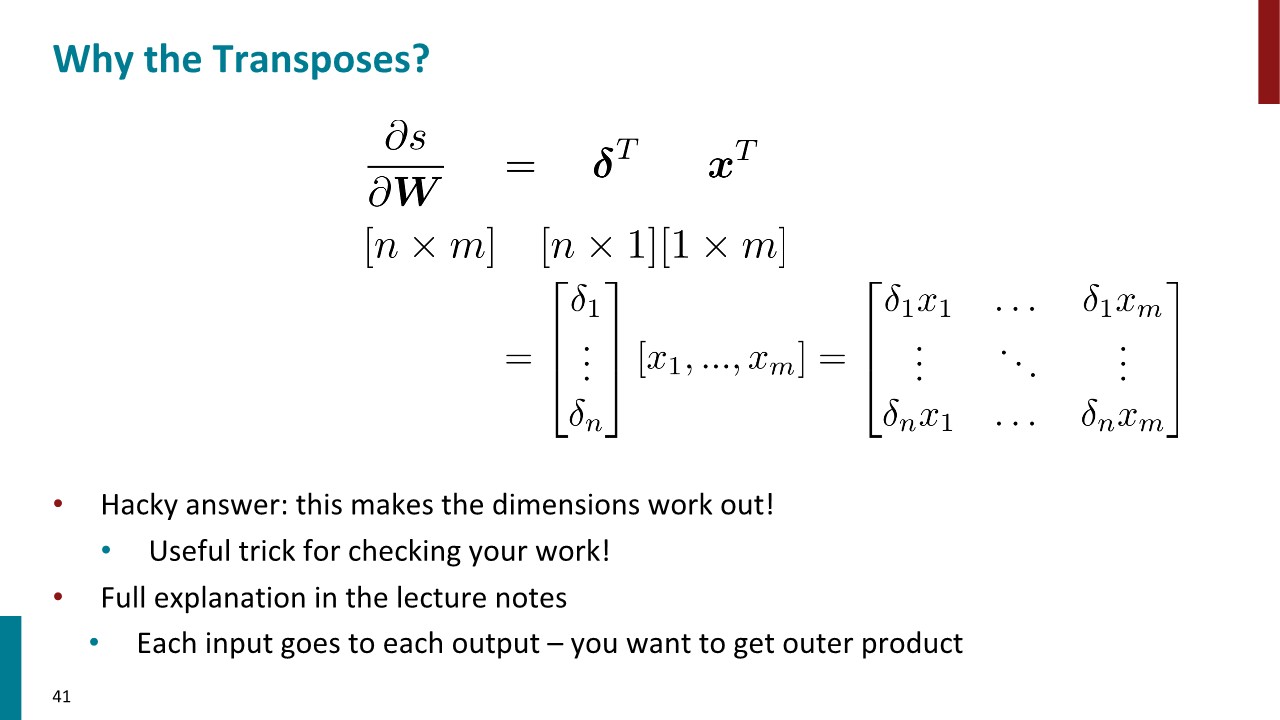

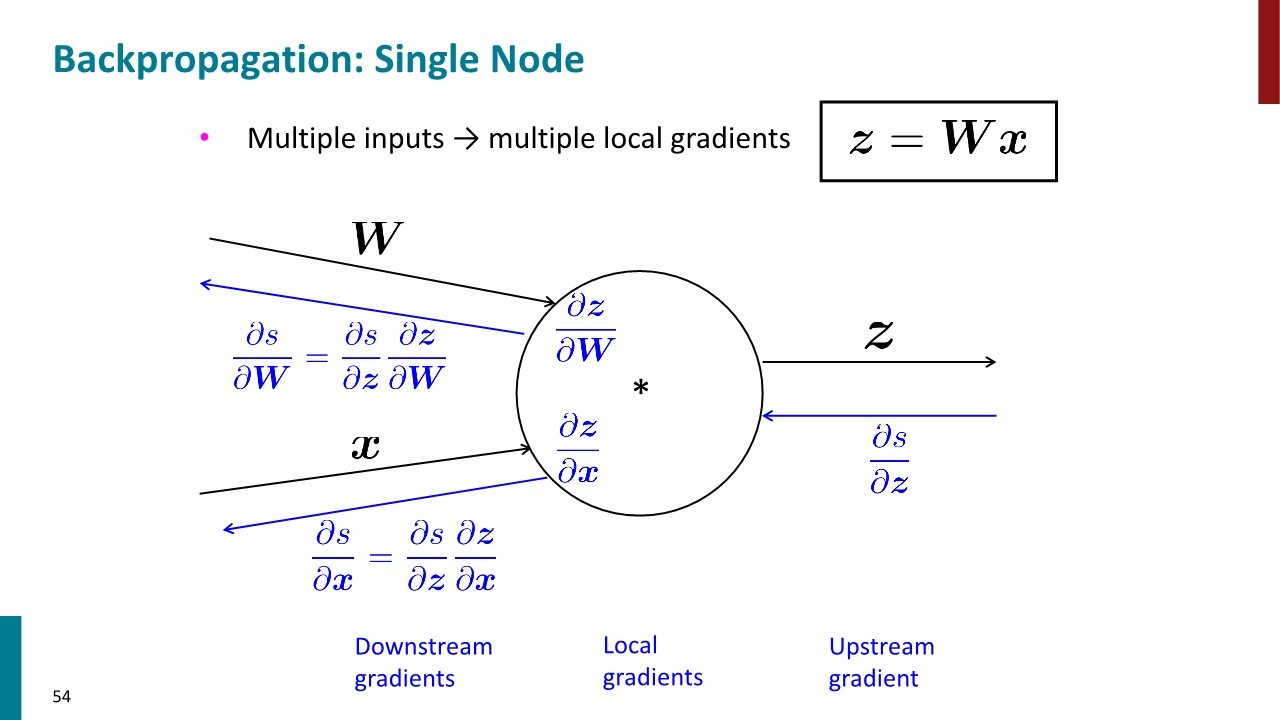

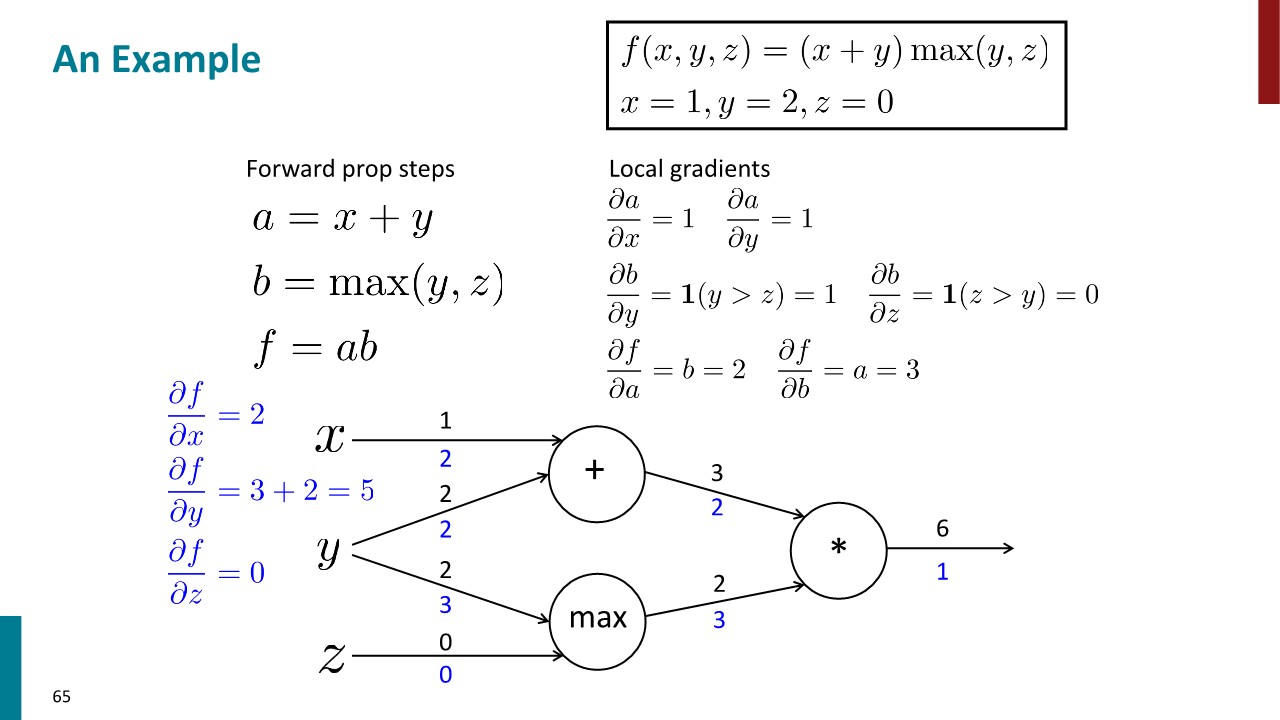

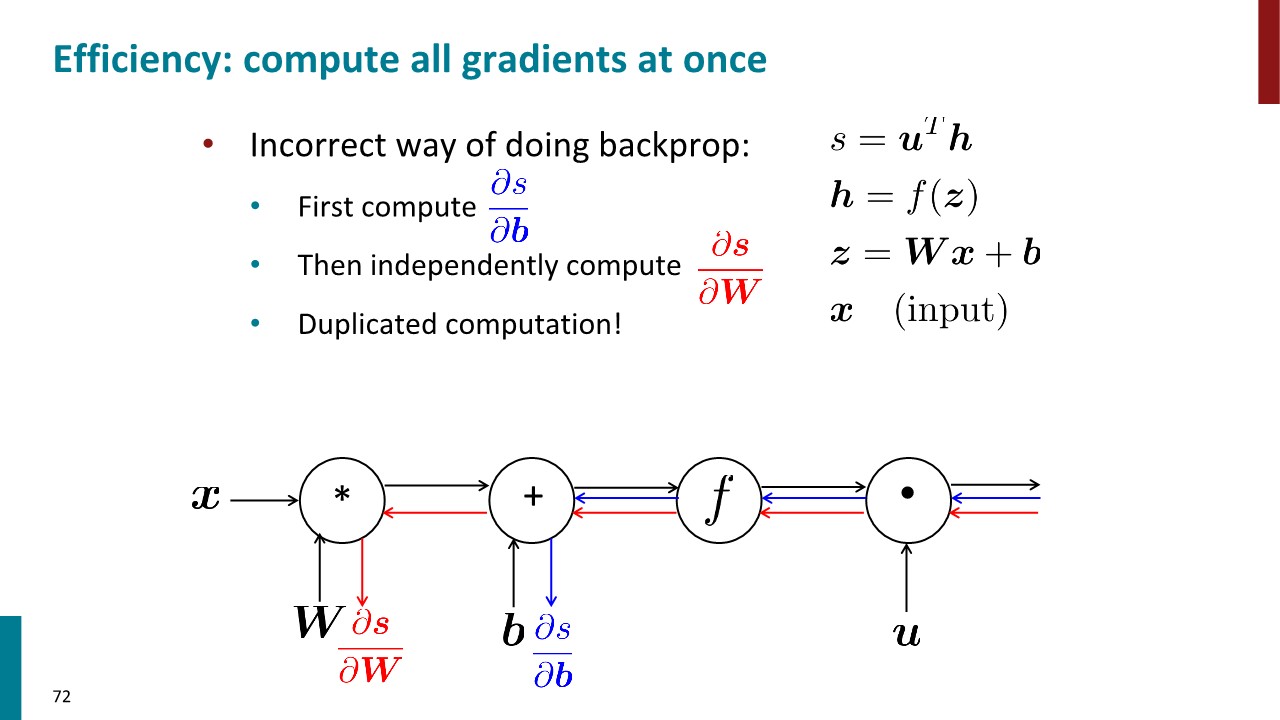

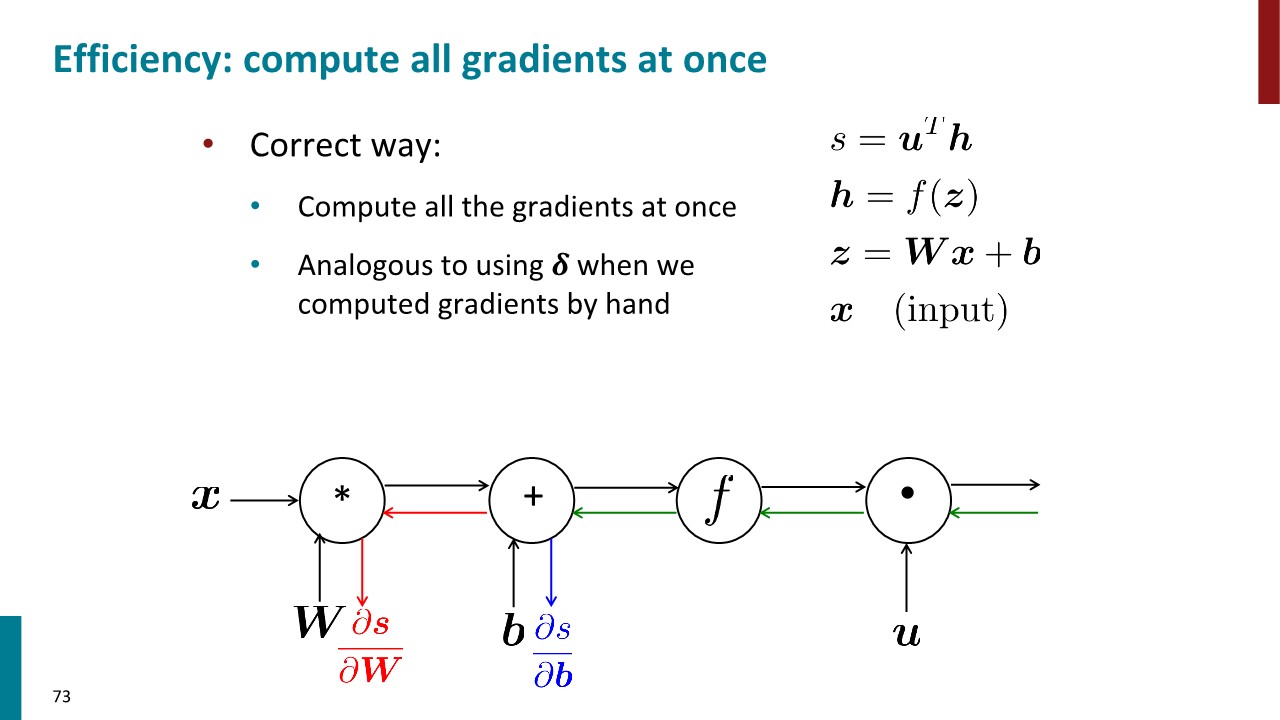

Backpropagation

Most of this overlaps with what was covered in CS231n, so only a few slides that are useful for understanding are archived here.
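As a reminder of the mechanics, here is a small hand-worked sketch of backpropagation on the function f(x, y, z) = (x + y) * max(y, z). The function and the input values are chosen only for illustration. The backward pass applies the chain rule node by node, and a numerical gradient check confirms the result.

```python
def forward_backward(x, y, z):
    # Forward pass: compute and keep intermediate values
    a = x + y
    b = max(y, z)
    f = a * b
    # Backward pass: local gradients multiplied by upstream gradients
    df_da = b                      # d(a*b)/da
    df_db = a                      # d(a*b)/db
    db_dy = 1.0 if y > z else 0.0  # max routes the gradient to the larger input
    db_dz = 1.0 - db_dy
    df_dx = df_da * 1.0
    df_dy = df_da * 1.0 + df_db * db_dy  # y feeds two nodes, so gradients add
    df_dz = df_db * db_dz
    return f, (df_dx, df_dy, df_dz)

def numerical_gradient(x, y, z, eps=1e-6):
    # Central differences, used only to check the analytic gradients
    f = lambda *args: forward_backward(*args)[0]
    return (
        (f(x + eps, y, z) - f(x - eps, y, z)) / (2 * eps),
        (f(x, y + eps, z) - f(x, y - eps, z)) / (2 * eps),
        (f(x, y, z + eps) - f(x, y, z - eps)) / (2 * eps),
    )

value, grads = forward_backward(1.0, 2.0, 0.0)
print(value, grads)  # 6.0 (2.0, 5.0, 0.0)
print(numerical_gradient(1.0, 2.0, 0.0))
```

The point to notice is `df_dy`: because `y` is used in both the sum and the max, its gradient is the sum of the gradients flowing back along both paths.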

III. Dependency Parsing

1. Syntactic Structure : Constituency and Dependency

(1) Constituency

Phrase structure organizes words into nested constituents.

- Starting unit : words

- the, cat, cuddly, by, door

- Words combine into phrases

- the cuddly cat, by the door

- Phrases can combine into bigger phrases

- the cuddly cat by the door
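The nesting described above can be sketched as a tree of tuples. This is only an illustration: the category labels (`NP`, `PP`, `Det`, and so on) and the exact bracketing are assumptions following common convention, not something specified in these notes.

```python
# Each node is (label, child, child, ...); a word node is (label, "word").
tree = ("NP",
        ("NP", ("Det", "the"), ("Adj", "cuddly"), ("N", "cat")),
        ("PP", ("P", "by"),
               ("NP", ("Det", "the"), ("N", "door"))))

def leaves(node):
    """Return the words under a node, left to right."""
    label, *children = node
    if len(children) == 1 and isinstance(children[0], str):
        return [children[0]]
    return [word for child in children for word in leaves(child)]

def phrases(node):
    """Return (label, text) for every multi-word constituent, top-down."""
    label, *children = node
    if len(children) == 1 and isinstance(children[0], str):
        return []  # a single word is the starting unit, not a phrase
    found = [(label, " ".join(leaves(node)))]
    for child in children:
        found.extend(phrases(child))
    return found

for label, text in phrases(tree):
    print(label, "->", text)
```

Words are the leaves, and each enclosing tuple is a constituent that can itself sit inside a bigger constituent.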

(2) Dependency

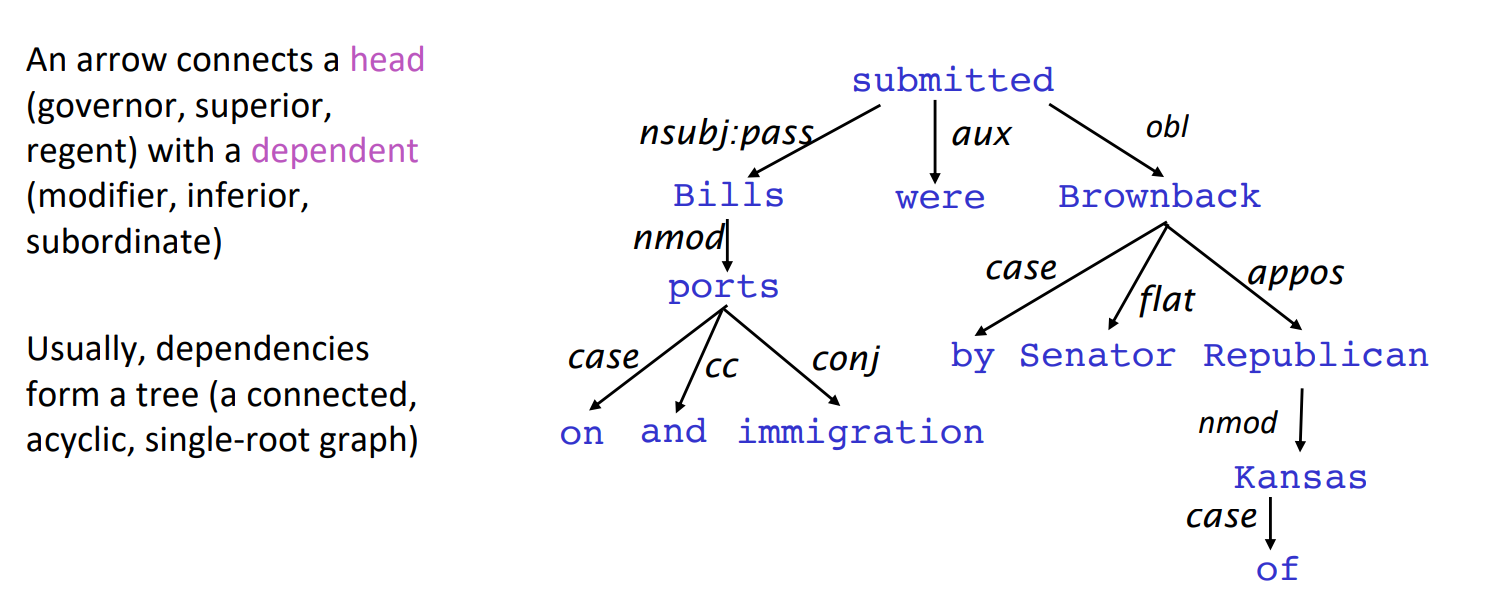

Dependency structure shows which words depend on (modify, attach to, or are arguments of) which other words.

(3) Sentence structure

Why do we need sentence structure?

Humans communicate complex ideas by composing words together into bigger units to convey complex meanings. Listeners need to work out what modifies what. A model needs to understand sentence structure in order to be able to interpret language correctly.

Ambiguities

- Prepositional phrase attachment ambiguity

- Coordination scope ambiguity

- Adjectival/Adverbial modifier ambiguity

- Verb phrase attachment ambiguity

2. Dependency Grammar and Treebanks

(1) Dependency Grammar

Dependency syntax postulates that syntactic structure consists of relations between lexical items, normally binary asymmetric relations (“arrows”) called dependencies.

We usually add a fake ROOT so every word is a dependent of precisely 1 other node.
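One common way to store such a structure is a list of head indices, with index 0 reserved for the fake ROOT. The sketch below assumes that encoding and uses a made-up three-word sentence. The helper name `reaches_root` is hypothetical. Since every word has exactly one entry in the list, each word has exactly one head by construction; the function additionally checks that following heads from any word ends at ROOT, i.e. that there are no cycles.

```python
def reaches_root(heads):
    """heads[i - 1] is the head of word i (1-based); 0 is the fake ROOT."""
    n = len(heads)
    for start in range(1, n + 1):
        seen = set()
        node = start
        while node != 0:
            head = heads[node - 1]
            if node in seen or not (0 <= head <= n):
                return False  # cycle or out-of-range head index
            seen.add(node)
            node = head
    return True

words = ["I", "ate", "fish"]
heads = [2, 0, 2]   # I <- ate, ate <- ROOT, fish <- ate

for i, head in enumerate(heads, start=1):
    head_word = "ROOT" if head == 0 else words[head - 1]
    print(f"{head_word} -> {words[i - 1]}")

print(reaches_root(heads))       # True
print(reaches_root([2, 1, 2]))   # False: words 1 and 2 point at each other
```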

(2) Treebanks

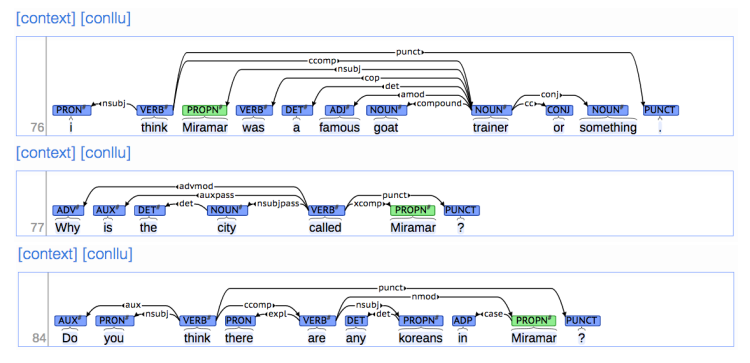

The rise of annotated data & Universal Dependencies treebanks

A treebank gives us many things

- Reusability of the labor

- Broad coverage, not just a few intuitions

- Frequencies and distributional information

- A way to evaluate NLP systems

Sources of information for dependency parsing

- Bilexical affinities

- Dependency distances

- Intervening material

- Valency of heads

3. Transition-based dependency parsing

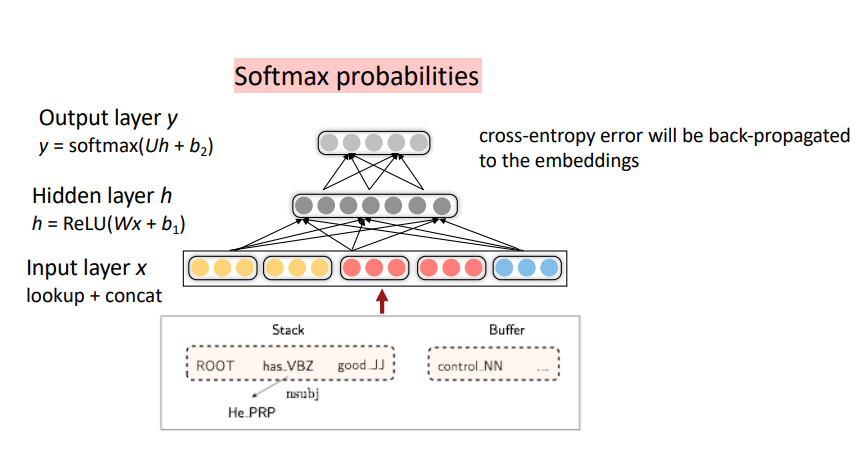

Basic transition-based dependency parser

- Arc-standard transition-based parser

- 3 actions : SHIFT, LEFT-ARC, RIGHT-ARC

- MaltParser

- Each action is predicted by a discriminative classifier over each legal move.

- It provides very fast linear time parsing, with high accuracy - great for parsing the web.
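The three actions can be sketched as a small arc-standard parser over a stack and a buffer. This is a minimal illustration, not MaltParser's implementation: in place of a trained classifier it uses a hypothetical `oracle` function that reads the correct action off a known gold tree, and the sentence and its head indices are made up for the example. Words are numbered from 1 and 0 is ROOT.

```python
def parse(n_words, choose_action):
    """Run arc-standard transitions; returns (arcs, action history)."""
    stack, buffer = [0], list(range(1, n_words + 1))
    arcs, history = [], []          # arcs are (head, dependent) pairs
    while buffer or len(stack) > 1:
        action = choose_action(stack, buffer, arcs)
        if action == "SHIFT":
            if not buffer:
                raise ValueError("SHIFT with an empty buffer")
            stack.append(buffer.pop(0))
        elif action == "LEFT-ARC":
            dependent = stack.pop(-2)           # second-from-top gets a head
            arcs.append((stack[-1], dependent))
        elif action == "RIGHT-ARC":
            dependent = stack.pop()             # top gets a head
            arcs.append((stack[-1], dependent))
        else:
            raise ValueError(f"unknown action: {action}")
        history.append(action)
    return arcs, history

def oracle(stack, gold_heads, attached):
    """Stand-in for the classifier: pick the action from the gold tree."""
    if len(stack) >= 2:
        top, second = stack[-1], stack[-2]
        if second != 0 and gold_heads[second] == top:
            return "LEFT-ARC"
        # Attach `top` only after all of its own dependents are attached.
        done = all(d in attached for d, h in gold_heads.items() if h == top)
        if gold_heads[top] == second and done:
            return "RIGHT-ARC"
    return "SHIFT"

words = ["I", "ate", "fish"]
gold = {1: 2, 2: 0, 3: 2}   # word index -> head index (0 = ROOT)

arcs, history = parse(
    len(words),
    lambda stack, buffer, arcs: oracle(stack, gold, {d for _, d in arcs}),
)
print(history)  # ['SHIFT', 'SHIFT', 'LEFT-ARC', 'SHIFT', 'RIGHT-ARC', 'RIGHT-ARC']
print(arcs)     # [(2, 1), (2, 3), (0, 2)]
```

Each word is shifted once and attached once, so a sentence of n words takes 2n transitions, which is where the linear running time comes from. A real parser replaces `oracle` with a classifier that scores the legal moves from features of the current stack and buffer.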

4. Neural dependency parsing

Neural networks can accurately determine the structure of sentences, supporting interpretation.

IV. Summary

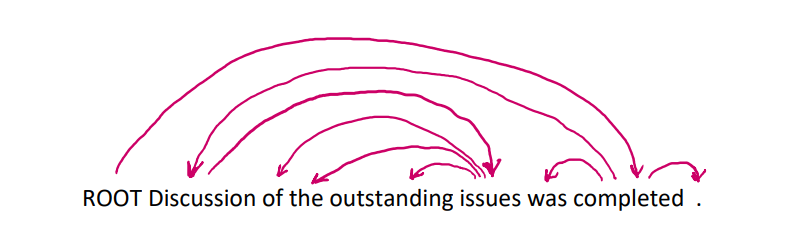

To understand a sentence, we need to work out which word each word modifies. These relations are called dependencies, and identifying them for a given sentence is called dependency parsing. Running dependency parsing over large corpora is one of the important tasks in NLP.

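Transition-based dependency parsing is an algorithmic approach to dependency parsing built on the notions of a stack and a buffer. MaltParser achieved high performance using machine learning, and more recently neural dependency parsing, which uses neural networks, has become one of the most powerful approaches. Other approaches include graph-based dependency parsers.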